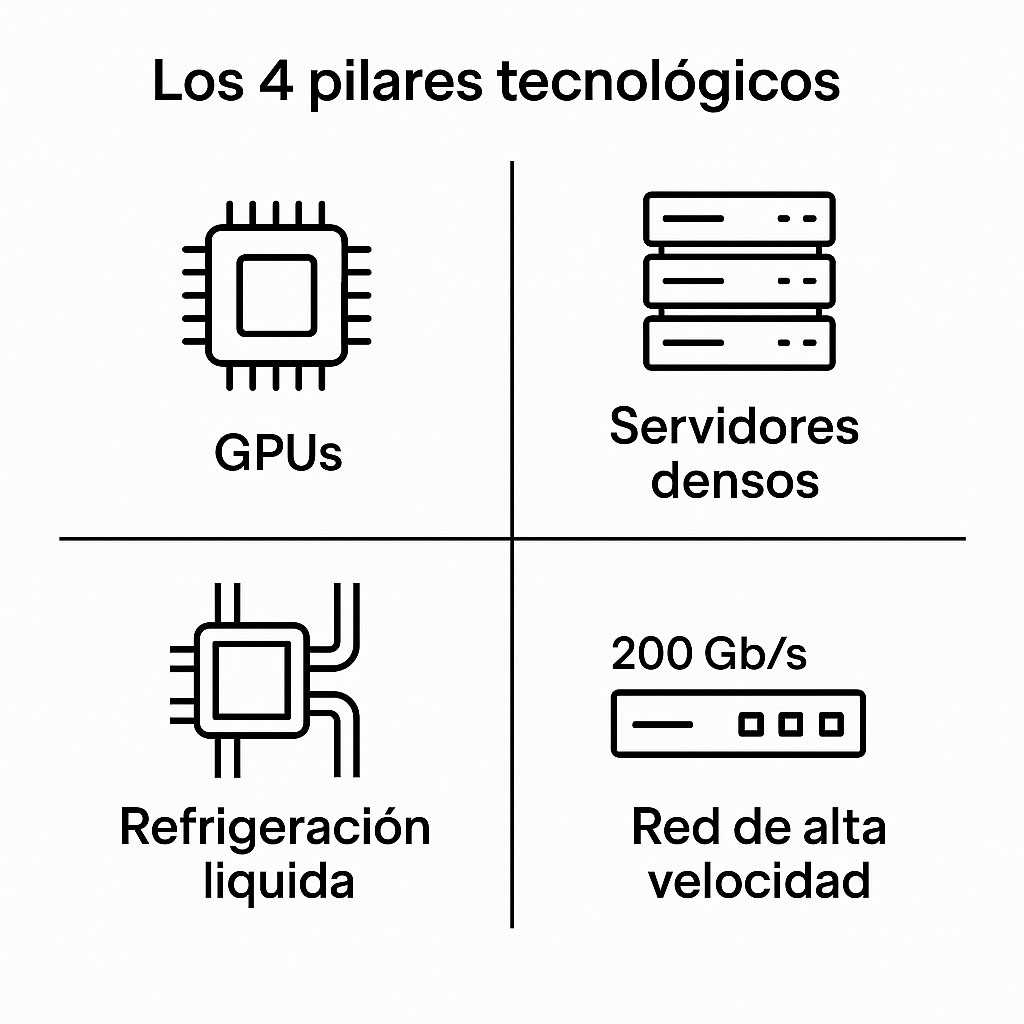

Current generative AI models, with hundreds of billions of parameters, have sharply increased the computing demands on data centers and the corporate clouds that support them (Cloud for AI). Training these models and running inference on them without bottlenecks requires moving terabytes of data per second, dissipating more than 100kW per rack, and scaling computing capacity efficiently. This challenge can only be met by relying on four well-defined technological pillars:

- Latest generation GPUs, with HBM memory and NVLink/NVSwitch links.

- Ultra-dense servers, which maximize the use of each rack unit.

- Advanced liquid cooling, capable of operating with warm water to extract high thermal loads.

- High-speed 200Gb/s networks, which move data between GPUs and nodes without bottlenecks.

This type of AI cloud requires complete integration between specialized hardware, efficient cooling, and high-speed connectivity to support next-generation models.

Throughout the article, we break down each of these pillars, explaining how they combine in practice and what real technologies make them possible.

GPUs: The Engine of AI

Graphics processors lead computing performance today thanks to their massive capacity for floating-point operations (measured in FLOPS) and for moving data at high speed. A single modern GPU offers up to 288GB of HBM3e memory and over 8TB/s of memory bandwidth, figures that no conventional CPU can match.

Cards like the NVIDIA H100/H200 or the new AMD Instinct MI350 combine this memory with tens of thousands of cores and high-speed links like NVLink and NVSwitch, which allow multiple GPUs to function as one. This frees up traditional CPUs for coordination tasks, while the GPUs focus on intensive computation. Manufacturers such as ASUS and Gigabyte have already adapted their servers to take advantage of these capabilities. For example, nodes with up to eight GPUs interconnected by NVSwitch can deliver more than 4PFLOPS in FP16 precision, which is 30 times the power of a conventional CPU server rack.

To take advantage of all this performance, the software must keep up: libraries such as NCCL, GPUDirect RDMA and GPUDirect Storage allow data to flow between GPUs, network and storage without going through the system RAM, reducing latencies to the microsecond level.

Dense Cloud Servers for AI: More Power in Less Space

The key metric in an AI cluster is not just raw power, but how much of that power fits in each rack (FLOPS/U). To achieve extreme densities, servers with multiple high-performance GPUs are clustered together, precisely positioned to maximize space utilization, cooling, and internal connectivity.

A well-optimized 42U rack might include:

- 4–6 eight-GPU servers, interconnected via PCIe Gen5 buses or NVSwitch links.

- Network and management switches (200Gb/s), mounted at the top of the rack.

- Distributed power modules (48–54VDC busbar, exclusive to OCP V3 racks) and, depending on the overall design, also thermal control modules or external racks dedicated to shared cooling.

This type of architecture can achieve 20–25 FP16 PFLOPS per rack. However, the final performance will also depend on the communications software, which must be adapted to the GPU manufacturer:
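As a rough check on these figures, the rack-level numbers can be estimated from the per-node throughput. The sketch below is a back-of-the-envelope calculation, not a benchmark: it assumes about 4 PFLOPS of FP16 per eight-GPU node (the figure quoted earlier) and a 42U rack, and the function names are purely illustrative.

```python
def rack_fp16_pflops(servers: int, pflops_per_server: float = 4.0) -> float:
    """Peak FP16 throughput of a rack, ignoring communication overhead.

    The 4.0 PFLOPS default is an assumption taken from the per-node
    figure quoted in the article, not a measured value.
    """
    return servers * pflops_per_server


def density_pflops_per_u(rack_pflops: float, rack_units: int = 42) -> float:
    """Compute density expressed as PFLOPS per rack unit (FLOPS/U)."""
    return rack_pflops / rack_units


if __name__ == "__main__":
    for servers in (4, 5, 6):
        total = rack_fp16_pflops(servers)
        print(f"{servers} servers: {total:.0f} PFLOPS peak, "
              f"{density_pflops_per_u(total):.2f} PFLOPS/U")
```

Under these assumptions, five or six nodes give 20–24 PFLOPS of peak FP16, in line with the range above. Sustained performance will be lower and depends on the communication stack.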

- With NVIDIA: NCCL and GPUDirect RDMA.

- With AMD: RCCL and ROCmDirectRDMA.

- With Intel: oneCCL, with support in oneAPI/Level Zero.

Each ecosystem has its own optimized stack, so it’s a good idea to define the hardware platform before choosing the software environment.

Cloud Cooling for AI: Air or Liquid?

Before designing a cluster, you need to decide how to dissipate the 20–40kW that an AI rack can generate. There are two main approaches:

- Air cooling: This is the simplest and uses high-flow fans. It’s usually sufficient for small installations (up to three racks).

- Liquid cooling (LC): more efficient starting at 20kW/rack. It uses a water-glycol mix to extract heat directly from the GPUs and CPUs through cold plates.
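To get a feel for what liquid cooling implies in practice, the required coolant flow can be estimated from the heat balance P = ṁ · c_p · ΔT. The sketch below is only an approximation: it assumes plain water (c_p ≈ 4186 J/(kg·K), 1 kg per liter), whereas a real water-glycol mix has a lower specific heat and needs somewhat more flow. The function name and default values are illustrative assumptions.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # plain water; glycol mixes are lower
WATER_DENSITY_KG_PER_L = 1.0             # approximation for plain water


def coolant_flow_l_per_min(heat_load_kw: float,
                           delta_t_k: float,
                           specific_heat: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
                           density_kg_per_l: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow needed to carry away `heat_load_kw` with a temperature
    rise of `delta_t_k` between cold-plate inlet and outlet."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = heat_load_kw * 1000.0 / (specific_heat * delta_t_k)
    return mass_flow_kg_per_s / density_kg_per_l * 60.0


if __name__ == "__main__":
    for load_kw in (20, 40):
        flow = coolant_flow_l_per_min(load_kw, delta_t_k=10.0)
        print(f"{load_kw} kW at 10 K rise: about {flow:.1f} L/min")
```

With a 10 K temperature rise, which is itself an assumed design value, a 40kW rack needs on the order of 57 liters per minute of water.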

Within LC there are two modalities:

- Liquid-to-Air (L2A): Hot water is cooled in an exchanger inside the rack, dissipating the heat as warm air.

- Liquid-to-Liquid (L2L): The water transfers its heat to an external circuit, completely removing the heat from the technical room.

These solutions are not mutually exclusive. In many cases, they are combined: for example, liquid cooling for GPUs and air cooling for disks and secondary components.

Liquid cooling can be integrated into the rack itself (in-rack CDU) or managed from an external cabinet serving multiple racks (remote CDU).

Important Note: The NVIDIA Blackwell (B100/B200) generation requires liquid cooling as it exceeds 1kW per GPU. Previous generations (like the H100/H200) can still run on air cooling if you have a well-conditioned room.
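The cooling guidance in this section can be condensed into a small decision helper. This is a simplified sketch of the rules of thumb stated here (liquid from 20kW per rack or above 1kW per GPU, air for small installations of up to three racks), not a sizing tool. The function name and the "evaluate both" fallback for cases the article does not cover are assumptions added for illustration.

```python
def suggest_cooling(kw_per_rack: float, racks: int, watts_per_gpu: float) -> str:
    """Return a first-pass cooling suggestion from the article's rules of thumb."""
    if watts_per_gpu > 1000:
        # GPUs above 1 kW (the Blackwell case in the article) need liquid.
        return "liquid"
    if kw_per_rack >= 20:
        # Liquid cooling becomes more efficient from 20 kW per rack.
        return "liquid"
    if racks <= 3:
        # Small, lower-density installations usually manage with air.
        return "air"
    # Not covered by the article's guidance: flagged for a proper study.
    return "evaluate both"


if __name__ == "__main__":
    print(suggest_cooling(kw_per_rack=15, racks=2, watts_per_gpu=700))
    print(suggest_cooling(kw_per_rack=40, racks=2, watts_per_gpu=700))
    print(suggest_cooling(kw_per_rack=15, racks=8, watts_per_gpu=700))
```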

200Gb/s Networks: Moving Data Without Bottlenecks

To train large models, each GPU can send or receive up to 50–100GB per iteration. On a 32-node cluster, this generates several terabits per second of traffic. To avoid bottlenecks, each GPU should have its own 200Gb/s NIC, attached to the cluster network and placed in the same NUMA domain as the GPU it serves.
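A quick calculation shows why a dedicated 200Gb/s link per GPU matters. The sketch below assumes ideal line rate with no protocol overhead and eight GPUs per node; both are simplifying assumptions, and the function names are illustrative.

```python
def transfer_seconds(gigabytes: float, link_gbps: float = 200.0) -> float:
    """Time to move `gigabytes` over one link at line rate (1 GB = 8 Gb)."""
    return gigabytes * 8.0 / link_gbps


def aggregate_nic_tbps(nodes: int, gpus_per_node: int = 8,
                       link_gbps: float = 200.0) -> float:
    """Total NIC capacity of the cluster with one NIC per GPU, in Tb/s."""
    return nodes * gpus_per_node * link_gbps / 1000.0


if __name__ == "__main__":
    for gb in (50, 100):
        print(f"{gb} GB over one 200Gb/s link: {transfer_seconds(gb):.1f} s")
    print(f"32 nodes, one NIC per GPU: {aggregate_nic_tbps(32):.1f} Tb/s capacity")
```

Even at line rate, 50–100GB per GPU takes 2–4 seconds on a single 200Gb/s link, so sharing a NIC between several GPUs would multiply that time accordingly.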

How the Rack Network Is Structured in an AI Cloud

- GPU–NIC connection: Each GPU connects to its own 200Gb/s NIC (InfiniBand or Ethernet).

- Leaf switch: The NICs connect to switches at the top of the rack. These leaf switches act as the entry point to the cluster network.

- Spine switch: Each leaf connects to several spines, backbone switches that interconnect the different leaves. Thus, any GPU can communicate with another in a maximum of two switch-to-switch hops: GPU → leaf → spine → leaf → GPU.

- Scaling: This leaf-and-spine topology works well for up to 256 nodes. For larger deployments, move to more complex structures such as dragonfly+.
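The structure described above can be sized with a few lines of arithmetic. The sketch below assumes a non-blocking (1:1) two-tier fabric built from identical 64-port switches, with half of each leaf's ports facing NICs and half facing spines, and eight NICs per node. The 64-port radix and the function name are assumptions for illustration; real port counts depend on the switch model and link speed.

```python
import math


def size_leaf_spine(nodes: int, nics_per_node: int = 8,
                    switch_ports: int = 64) -> dict:
    """Size a non-blocking two-tier leaf-and-spine fabric.

    Assumes every switch has `switch_ports` ports of equal speed and that
    each leaf splits them evenly between NIC downlinks and spine uplinks.
    """
    endpoints = nodes * nics_per_node
    downlinks = switch_ports // 2
    uplinks = switch_ports - downlinks
    leaves = math.ceil(endpoints / downlinks)
    # Every leaf uplink occupies one spine port.
    spines = math.ceil(leaves * uplinks / switch_ports)
    return {
        "endpoints": endpoints,
        "leaves": leaves,
        "spines": spines,
        # Each spine needs at least one port per leaf, which caps the leaf count.
        "fits_two_tiers": leaves <= switch_ports,
    }


if __name__ == "__main__":
    for n in (32, 256, 257):
        print(n, size_leaf_spine(n))
```

Under these assumptions, 256 nodes (2,048 NICs) fill exactly 64 leaves and 32 spines, and one more node no longer fits in two tiers. That is one way to arrive at a ceiling of about 256 nodes before a larger topology is needed.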

Rule of Thumb:

The total network bandwidth of your rack should be similar to the aggregate memory bandwidth of your GPUs, so as not to waste computing power.

Hardware examples (200Gb/s):

- NICs: NVIDIA ConnectX‑7, AMD Pensando DPU, Intel E810‑CQDA2

- Switches: NVIDIA InfiniBand QM9700, Broadcom Tomahawk6 (Ethernet)

These components support RDMA, a technology that allows GPUs to exchange data directly without going through the CPU, improving efficiency and reducing latency.

Conclusion: Accelerate AI Without Improvising

Adopting advanced AI means transforming the infrastructure. It’s not enough to simply buy powerful servers: you have to design a coherent AI cloud, where computing, cooling, and networking work in harmony.

GPU servers from ASUS, Gigabyte, ASRock Rack, and Supermicro demonstrate how the ecosystem is already prepared to deliver solutions ready to train large-scale models. But deploying them successfully requires experience, precision, and practical knowledge. ViveRack, the line of high-performance solutions marketed by ibertronica, brings together all of these elements in proven configurations: certified GPU nodes, cooling distribution, 200Gb/s switching, and an architecture that is ready to grow.

👉 If your company is evaluating a qualitative leap in AI, we invite you to learn more about ViveRack configurations and discover how we can help you turn your data center into a true model factory.

You can contact us through the form available on our website to receive personalized advice.
