The NVIDIA HGX platform has established itself as the de facto standard for training and deploying large-scale artificial intelligence (AI) models. With a combination of Hopper (H100/H200) and, starting in 2024, Blackwell (B200/B300) GPUs, a 1.8 TB/s NVLink 5 interconnect, 800 Gb/s InfiniBand and Ethernet networks, BlueField-3 DPUs, and an open, community-defined OCP format, HGX delivers the performance, scalability, and efficiency required by generative models with trillions of parameters.

The Computational Challenge of Modern AI

Language and vision models now have hundreds of billions of parameters, requiring infrastructure capable of moving large amounts of data without bottlenecks. With HGX, NVIDIA packages everything a data center needs to scale from a few GPUs to thousands working together into a single “building block.”

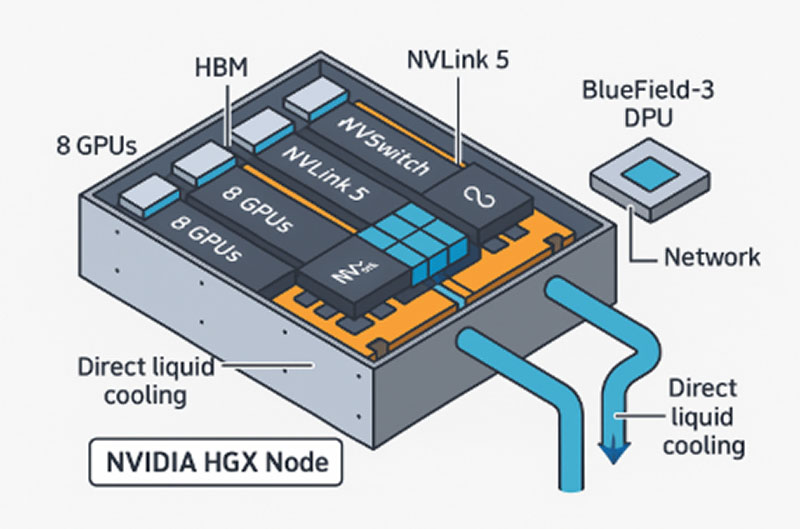

How is an NVIDIA HGX node organized?

An HGX node is like a super module that sits inside a rack and brings together, in a single chassis, everything needed to train and serve AI models without bottlenecks. Its architecture is divided into five distinct blocks:

- GPU Group

• 4 to 8 Hopper (H100/H200) or Blackwell (B200/B300) GPUs mounted on an SXM socket.

• Each GPU incorporates HBM memory (up to 141 GB per chip on the H200) to support massive datasets.

- NVLink 5 Internal Network + NVSwitch

• Up to 1.8 TB/s of bandwidth per GPU, double the previous NVLink generation.

• Allows all eight chips to share memory and act as a single logical GPU.

- 800 Gb/s External Network

• ConnectX-8 cards and Quantum-X800 (InfiniBand) or Spectrum-X (Ethernet) switches.

• Connects multiple HGX nodes to form clusters of thousands of GPUs with latencies below 2 µs.

- BlueField-3 DPU

• Accelerates zero-trust networking, storage, and security operations without stealing cycles from the GPUs.

• According to NVIDIA, it frees up to 30% of compute capacity that would otherwise be occupied by I/O tasks.

- Thermal and Power Management

• Maximum consumption of 6.8 kW per node, direct-to-chip liquid cooling, and 54 V power backed by the Open Compute Project (OCP).

Because the HGX form factor is published in OCP, any manufacturer can add x86 or Arm CPUs, additional storage, or custom interconnects, while maintaining full compatibility with CUDA and the NVIDIA AI Enterprise suite.
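To make the per-node figures above easier to reason about, here is a minimal Python sketch that derives two aggregate numbers from them. The `HGXNode` class and its field names are hypothetical, introduced only for illustration. The defaults use the 141 GB H200 memory figure and the 6.8 kW maximum quoted in this article, and the power split treats the whole node budget as if it went to the GPUs, which overstates the real per-GPU draw.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HGXNode:
    """Hypothetical container for the per-node figures quoted in the article."""

    gpu_count: int = 8          # the article allows 4 to 8 GPUs per node
    hbm_per_gpu_gb: int = 141   # H200 figure quoted in the article
    max_power_kw: float = 6.8   # maximum node consumption quoted in the article

    def total_hbm_gb(self) -> int:
        """Aggregate HBM capacity across all GPUs in the node."""
        return self.gpu_count * self.hbm_per_gpu_gb

    def power_per_gpu_kw(self) -> float:
        """Upper bound: assigns the entire node power budget to the GPUs."""
        return self.max_power_kw / self.gpu_count


node = HGXNode()
print(node.total_hbm_gb())                 # 1128
print(round(node.power_per_gpu_kw(), 2))   # 0.85
```

With eight H200 GPUs, the node exposes roughly 1.1 TB of HBM in total. A 4-GPU variant can be modeled with `HGXNode(gpu_count=4)`, although its real power ceiling would likely differ from the 6.8 kW default.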

HGX solutions available today

- Gigabyte – G593-SD0 (HGX H100 8-GPU) and G59x-B (HGX B200) servers; liquid-cooled version G593-SD0-LAX1, in mass production.

- ASUS – ESC N8A-E12 (HGX H100 8-GPU with EPYC 9004) and ESC N8-E11V (HGX H200/H100); global availability from late 2024.

- ASRock Rack – 6U8X-EGS2 H200 (HGX H200 8-GPU) platform now shipping; 6U8X-GNR2 B200 announced with shipments expected in the second half of 2025.

- Supermicro – SYS-821GE-TNHR (HGX H100/H200 8-GPU) and new liquid-cooled ORv3 chassis with HGX B200 in pre-production (pre-orders starting in Q4 2025).

Collectively, we estimate over 300,000 HGX nodes (≈2.4 million GPUs) in production worldwide, with our four partners—Gigabyte, ASUS, ASRock Rack, and Supermicro—covering nearly all of our customer configurations.
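The GPU total in that estimate follows from simple multiplication, sketched below. The 8-GPUs-per-node value is an assumption inferred from the two quoted numbers, not something the estimate states. Since HGX nodes can also ship with 4 GPUs, the real total could be lower.

```python
NODES_IN_PRODUCTION = 300_000  # node estimate quoted in the article
GPUS_PER_NODE = 8              # assumption: every node is an 8-GPU configuration


def fleet_gpu_count(nodes: int, gpus_per_node: int) -> int:
    """Total GPUs in a fleet of identical nodes."""
    return nodes * gpus_per_node


total = fleet_gpu_count(NODES_IN_PRODUCTION, GPUS_PER_NODE)
print(f"{total / 1e6:.1f} million GPUs")  # 2.4 million GPUs
```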

Key Benefits

- Linear Scalability: Adding nodes multiplies performance without rewriting software.

- Flexibility: Supports x86, Arm Grace CPUs, or custom accelerators using NVLink Fusion.

- Efficiency: More performance per watt than PCIe solutions thanks to NVLink and HBM3e memory.

- Security: BlueField-3 enables micro-segmentation and end-to-end encryption with no impact on latency.

- Open Ecosystem: Any manufacturer can offer variants tailored to specific needs (GPU count, cooling, storage).

Advantages of HGX over DGX

Unlike NVIDIA DGX systems, which are sold as closed, off-the-shelf solutions, the HGX standard offers critical advantages for large-scale or specialized deployments:

- Full Modularity – Choose from 4 to 8 GPUs per node (or 72 GPUs in NVL72 configurations) and combine them with your preferred CPU (x86, Grace, or Arm from other vendors).

- Linear Scalability – The NVLink 5 + NVSwitch interconnect allows you to grow from one node to thousands while maintaining memory coherence and without rewriting software.

- Ultra-High-Speed Networking – Supports 800 Gb/s InfiniBand and Ethernet (evolving toward 1.6 Tb/s), whereas DGX is limited to its factory-installed fixed topology.

- Flexible Cooling – Air, direct-to-chip liquid, or immersion; adapts to the thermal density and policies of the data center.

- Cost-Optimized – Only the necessary modules are purchased, and existing racks and power supplies are reused, reducing the cost per GPU compared to a closed DGX server.

- Open Ecosystem (OCP) – The public specification accelerates innovation for OEMs, ODMs, and clouds, avoiding vendor lock-in.

- Optional BlueField-3 – Adds DPUs for programmable networking and zero-trust security, something not always present in the DGX line.
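The bandwidth figures quoted in this article use different units: gigabits per second for the external network and terabytes per second for NVLink. The sketch below converts both to GB/s and compares idealized transfer times. The helper names and the 141 GB payload (one H200's worth of HBM) are hypothetical choices for illustration. The results are peak-rate estimates that ignore protocol overhead, link direction, and contention, so real transfers would be slower.

```python
def gbit_to_gbyte_per_s(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbit_per_s / 8


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time at peak bandwidth, with no overhead."""
    return payload_gb / bandwidth_gb_per_s


NETWORK_GB_S = gbit_to_gbyte_per_s(800)  # one 800 Gb/s link = 100 GB/s
NVLINK_GB_S = 1.8 * 1000                 # 1.8 TB/s = 1800 GB/s

payload_gb = 141  # hypothetical payload: the HBM capacity of one H200

print(f"external network: {transfer_seconds(payload_gb, NETWORK_GB_S):.2f} s")  # 1.41 s
print(f"NVLink:           {transfer_seconds(payload_gb, NVLINK_GB_S):.2f} s")   # 0.08 s
print(f"ratio: {NVLINK_GB_S / NETWORK_GB_S:.0f}x")                              # 18x
```

At these peak rates, the GPU-to-GPU fabric inside the node is about 18 times faster than a single external link. That gap is one reason to keep the most communication-heavy traffic inside the node where possible.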

Conclusion

NVIDIA HGX brings together the raw power of the latest GPUs, 800 Gb/s networking, and a complete software ecosystem into a single open platform. Its massive adoption and presence on the roadmaps of companies like Google, Oracle, and Microsoft indicate that it will continue to be the cornerstone of future advancements in generative AI and HPC.

Want to find out which HGX solution best suits your project?

Fill out this short contact form and our team will send you a personalized, no-obligation proposal within 24 hours.
