Introduction: AI Breaks the Limits of Traditional Racks

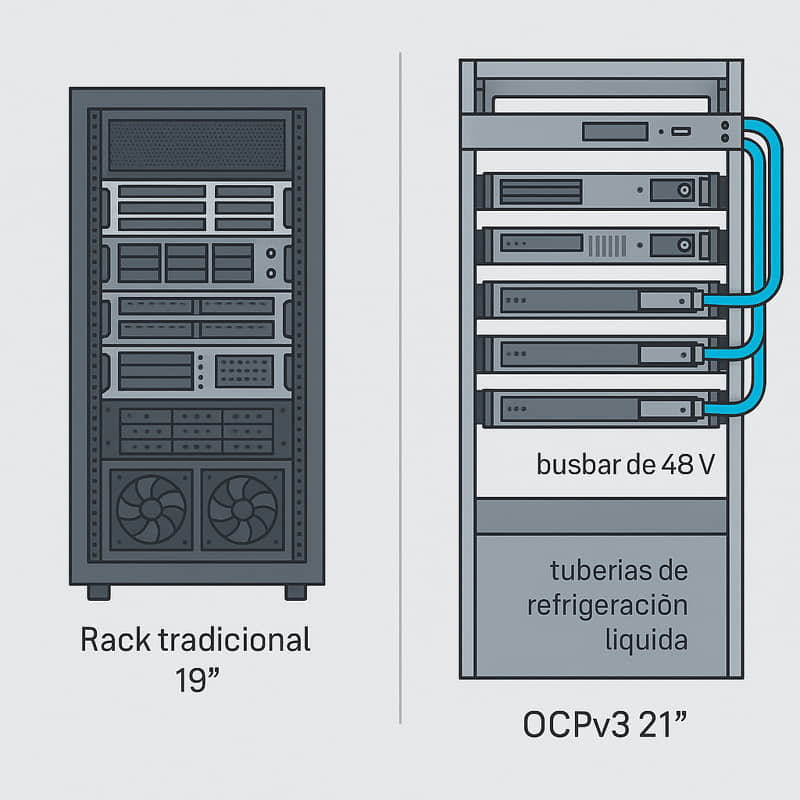

The rise of language and vision models, from ChatGPT to enterprise reinforcement learning systems, has driven up demand for GPUs and accelerators. A single node now draws more than 1,000 W, and a single cluster can dissipate more than 1 MW of heat. 19″ EIA-310 racks, designed for conventional workloads, are not built for this density or for the liquid cooling required by the new wave of Cloud for AI. Open Rack v3 (OCP v3 Rack Cabinets) was created to remove this bottleneck; it is the most ambitious evolution of the open hardware project launched in 2011.

What is the Open Compute Project?

The Open Compute Project (OCP) is a global open hardware community founded by Meta (then Facebook) in 2011. It has been joined by giants such as Google, Microsoft, Intel, AMD, NVIDIA, Dell, Inspur, and more than 300 academic organizations and supply chain providers.

Its mission is to design and share specifications for efficient, flexible, and scalable hardware—servers, storage, networking, power, and cooling—with open licenses. This way, any manufacturer can produce the designs and any operator can adopt them without being locked into a single supplier, accelerating innovation and reducing costs in a Cloud for AI.

Projects are structured into Project Groups (Server, Storage, Networking, AI Hardware, Cooling, Rack & Power, Sustainability), where members collaborate by publishing CAD drawings, bills of materials, and royalty-free firmware.

Additionally, supercomputing centers and universities are participating with contributions and real-world use cases.

In short, OCP is not just a rack standard, but an open ecosystem aimed at democratizing data center infrastructure and enabling a sustainable, high-performance Cloud for AI.

What’s New in OCPv3 Rack Cabinets for AI

21″ Chassis and High-Efficiency 48V Bus

OCPv3 expands the width of traditional cabinets to 21″ (21/19 ≈ 1.105, so roughly 10% wider than the classic 19″) to optimize airflow and provide space for liquid cooling tubing. The big news, however, is centralized power distribution:

- No PSU per server: OCP sleds have no power supply of their own. Each sled slides in with a blind-mate connector that plugs directly into a 48V busbar running along the back of the rack.

- Hot-swappable power modules: Conversion from AC mains to 48V is performed by removable modules located at the top or bottom of the cabinet. These modules act as centralized power supplies for all nodes in the cabinet, are grouped in N+1 or N+N configurations, and can be hot-swapped without stopping the nodes.

- Integrated monitoring: Each module sends current, voltage, and temperature data to the rack’s BMC, allowing consumption to be orchestrated and overloads to be prevented.

This architecture eliminates dozens of small redundant PSUs, reducing conversions and increasing efficiency. In addition, the HPR (High-Power Rack) variant reinforces the busbar to deliver up to 92 kW per rack, sufficient for next-generation GPU clusters in an AI Cloud.
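To give a feel for the numbers, here is a minimal sizing sketch. The 48 V and 92 kW figures come from the text above; the 5,500 W module rating and the function names are assumptions chosen only for illustration, not values from the Open Rack specification.

```python
import math

BUS_VOLTAGE_V = 48.0        # nominal busbar voltage quoted in this article
RACK_POWER_W = 92_000.0     # HPR figure quoted in this article
MODULE_RATING_W = 5_500.0   # ASSUMPTION: hypothetical rating of one power module


def busbar_current_a(power_w: float, voltage_v: float = BUS_VOLTAGE_V) -> float:
    """Current the busbar carries at a given load (I = P / V)."""
    return power_w / voltage_v


def modules_required(load_w: float,
                     module_w: float = MODULE_RATING_W,
                     scheme: str = "N+1") -> int:
    """Number of power modules needed for a load under a redundancy scheme."""
    n = math.ceil(load_w / module_w)  # modules needed with no redundancy
    if scheme == "N+1":
        return n + 1                  # one spare module
    if scheme == "N+N":
        return 2 * n                  # fully duplicated set
    raise ValueError(f"unknown redundancy scheme: {scheme}")


print(round(busbar_current_a(RACK_POWER_W)))           # 1917 (amperes)
print(modules_required(RACK_POWER_W, scheme="N+1"))    # 18
print(modules_required(RACK_POWER_W, scheme="N+N"))    # 34
```

Close to 2,000 A on a single conductor helps explain why the HPR variant needs a reinforced busbar.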

Liquid cooling: hybrid or direct, your choice

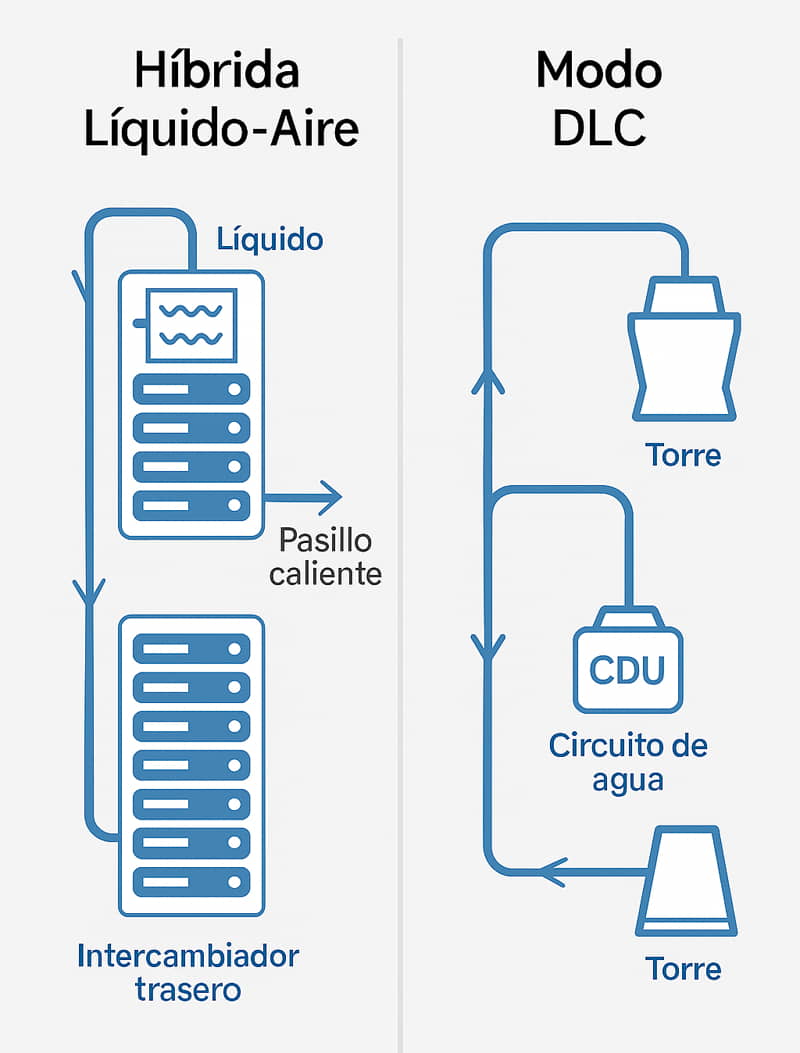

Before talking about pumps and pipes, it’s worth clarifying that there are two main ways to cool an OCPv3 rack:

- Hybrid mode (liquid-to-air cooling)

The liquid collects heat inside the server and releases it into a heat exchanger in the rear door—or in a side “sidecar”—which transfers it to the hot aisle air. This way, you can continue using your existing HVAC system.

Advantages: It leverages existing infrastructure, requires only low-pressure water, and easily reaches around 30 kW per rack.

Limitations: It falls short for very dense GPU clusters where rack power exceeds 40-50 kW.

- Liquid-to-liquid mode (Direct Liquid Cooling, DLC)

Here, nearly all the heat travels through liquid: it passes from the server to an internal technology cooling loop (TCL) and then, via a coolant distribution unit (CDU), to the building’s Facility Water Loop (FWL). The room air barely warms up.

Advantages: It dissipates up to 90 kW per rack with an internal PUE of ≈1.1, maintains stable temperatures year-round, and prepares the room for future GPUs drawing more than 1 kW each.

Requirement: Connection to a water loop or external cooling tower.
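As a rough order-of-magnitude check, the coolant flow a rack needs follows from the heat balance Q = ṁ · c_p · ΔT. The sketch below assumes plain water and a 10 K temperature rise across the rack; both are illustrative assumptions (real loops often use water-glycol mixtures and other temperature rises), and the function name is hypothetical.

```python
WATER_CP_J_PER_KG_K = 4186.0    # specific heat of water
WATER_DENSITY_KG_PER_L = 1.0    # approximate density of water


def coolant_flow_l_per_min(heat_w: float,
                           delta_t_k: float,
                           cp: float = WATER_CP_J_PER_KG_K,
                           density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Volumetric flow needed to carry heat_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)   # from Q = m_dot * cp * dT
    return mass_flow_kg_s / density * 60.0       # kg/s -> L/min


# ASSUMPTION: 10 K rise between coolant supply and return.
print(round(coolant_flow_l_per_min(90_000, 10.0)))   # 129 (L/min) for a 90 kW rack
print(round(coolant_flow_l_per_min(30_000, 10.0)))   # 43 (L/min) for a 30 kW rack
```

Halving the temperature rise doubles the required flow, which is one reason pump capacity and pipe diameter grow quickly with rack density.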

What’s inside the rack?

- Server modules with cold plates in direct contact with the CPU, GPU, and memory.

- Drip-free quick-connect manifolds that allow a server to be removed or inserted without leaks or tools.

- A smart CDU with redundant pumps and filters, which regulates flow, pressure, and temperature in real time.

The logic is simple: start in hybrid mode if your data center is still 100% air-cooled, and move to DLC as density increases. OCPv3 makes the transition easy because the same rack supports both schemes: just connect the water loop and, if desired, remove the liquid-to-air heat exchanger.
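That rule of thumb can be written down as a small decision helper. The thresholds are the approximate figures quoted in this article (about 30 kW comfortable for hybrid, 40-50 kW where hybrid falls short, about 90 kW for DLC); the function name and return strings are hypothetical, and a real design would depend on site-specific engineering.

```python
HYBRID_COMFORT_KW = 30   # hybrid "easily reaches" about this density
HYBRID_LIMIT_KW = 40     # lower end of the 40-50 kW range where hybrid falls short
DLC_LIMIT_KW = 90        # per-rack figure quoted for direct liquid cooling


def recommend_cooling(rack_kw: float, has_facility_water: bool) -> str:
    """Suggest a cooling mode from rack density and facility water availability."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if rack_kw > DLC_LIMIT_KW:
        # Beyond the single-rack figure quoted above.
        return "split load across racks"
    if rack_kw > HYBRID_LIMIT_KW:
        # Hybrid falls short here, so DLC is needed either way.
        if has_facility_water:
            return "dlc"
        return "dlc (facility water loop needed first)"
    if rack_kw > HYBRID_COMFORT_KW and has_facility_water:
        # Grey zone: prefer DLC when the water loop already exists.
        return "dlc"
    return "hybrid"


print(recommend_cooling(25, has_facility_water=False))   # hybrid
print(recommend_cooling(35, has_facility_water=True))    # dlc
print(recommend_cooling(60, has_facility_water=False))   # dlc (facility water loop needed first)
```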

Integrated power management and telemetry

The new Power Monitoring Interface (PMI) extends the legacy PMBus to aggregate all current, voltage, and temperature readings from the power modules, the busbar, and each sled into a single I²C channel. This data stream is exposed via Redfish or SNMP to orchestration systems, enabling:

- Predictive load balancing: If a bus segment approaches 80% capacity, the firmware reassigns nodes in milliseconds before protections are triggered.

- Dynamic power capping per server to avoid simultaneous peaks when the GPU cluster enters the ramp-up phase.

- Predictive maintenance: By correlating consumption, coolant flow, and temperature, thermal leaks or degraded power supplies are detected before they impact production.
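The first two items can be illustrated with a simplified sketch: detect when a bus segment crosses the 80% mark, then scale per-sled power caps so the total fits a budget. This is not the PMI firmware logic (which the text describes as reassigning nodes); it swaps in plain proportional capping, and every class and function name here is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Sled:
    name: str
    demand_w: float   # power the sled currently wants to draw


def segment_near_limit(sleds: list[Sled], capacity_w: float,
                       threshold: float = 0.80) -> bool:
    """True when total demand reaches the threshold fraction of segment capacity."""
    return sum(s.demand_w for s in sleds) >= threshold * capacity_w


def proportional_caps(sleds: list[Sled], budget_w: float) -> dict[str, float]:
    """Scale every sled's cap by the same factor so the total fits the budget."""
    total = sum(s.demand_w for s in sleds)
    if total <= budget_w:
        return {s.name: s.demand_w for s in sleds}   # no capping needed
    scale = budget_w / total
    return {s.name: s.demand_w * scale for s in sleds}


sleds = [Sled("gpu-0", 4000), Sled("gpu-1", 4000), Sled("cpu-0", 2000)]
capacity_w = 12_000   # ASSUMPTION: illustrative segment capacity

if segment_near_limit(sleds, capacity_w):
    caps = proportional_caps(sleds, budget_w=0.80 * capacity_w)
    print({name: round(w) for name, w in caps.items()})
    # {'gpu-0': 3840, 'gpu-1': 3840, 'cpu-0': 1920}
```

Proportional scaling is the simplest fair policy; a production scheduler would more likely weight caps by workload priority.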

The hierarchical topology (shelf BMC + rack BMC) reduces telemetry traffic by up to 60% and simplifies Zero-Touch Provisioning in Cloud for AI deployments.

OCPv3 in our VibeRacks: A New Paradigm

OCPv3 represents a paradigm shift in the way we power and cool data centers, but it’s still in its early stages. We don’t know if it will become the next dominant standard; what we do know is that many manufacturers are starting to adopt it, and it opens the door to densities and efficiencies that previously seemed unattainable.

At Ibertrónica, we want you to have this option from day one, which is why we offer our VibeRacks in two flavors:

- Classic 19″ VibeRack – ideal if your room is designed for EIA-310 racks and you prefer to stick with standard air cooling.

- 21″ VibeRack OCPv3 – designed for organizations looking for maximum density, modularity, and, above all, the option of liquid cooling.

Conclusion

OCP v3 marks a turning point in AI infrastructure and lays the foundation for data centers ready for the next wave of generative AI. If your organization wants to build or renew an AI Cloud with maximum efficiency, speed, and scalability, our VibeRacks team can work with you to design a turnkey solution based on OCPv3, liquid cooling, and next-generation GPUs.

Contact us today and transform your infrastructure into the engine of your competitive advantage.

