Artificial intelligence is transforming products and services across all industries. More and more companies are looking to develop their own AI solutions or build intelligent platforms for third parties. Making this leap requires careful planning. Before investing in Ibertrónica’s VibeRack racks or other high-performance solutions (Cloud for AI), it’s worth considering several key aspects. Below is a practical checklist, designed for business and operations managers, that will help you align your AI investment with your company’s objectives.

Long-Term Cloud for AI Growth Plan

Define a growth vision before setting up your AI infrastructure. The computing power you need today won’t be what you need a year from now. A clear plan lets you scale smoothly without falling short or overinvesting.

Ask yourself:

- What volume of AI projects and data will you be managing now and in the future? Consider both current use cases and potential growth in users or data over the coming months.

- When will you need to expand capacity? Set thresholds that signal when to expand. For example: "If average GPU utilization stays above a certain percentage for several consecutive months, we’ll add another node."
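An expansion threshold like the one above can be written down as an explicit rule, so the decision is repeatable rather than improvised. The sketch below is a minimal illustration, assuming you already collect a monthly average GPU utilization figure (0.0 to 1.0); the function name `should_add_node`, the 80% threshold and the three-month window are hypothetical values to adapt to your own workload.

```python
def should_add_node(monthly_avg_util, threshold=0.80, months=3):
    """Return True if average GPU utilization exceeded `threshold`
    in every one of the last `months` months.

    monthly_avg_util: monthly averages between 0.0 and 1.0, oldest first.
    threshold and months are hypothetical policy defaults.
    """
    if len(monthly_avg_util) < months:
        return False  # not enough history to justify an investment yet
    recent = monthly_avg_util[-months:]
    return all(u > threshold for u in recent)


history = [0.55, 0.68, 0.82, 0.85, 0.88]
print(should_add_node(history))  # True: the last three months are all above 80%
```

Requiring the threshold to hold for several consecutive months, instead of reacting to a single busy month, avoids buying hardware for a temporary peak.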

Having a phased plan prevents you from improvising and prepares you to justify investments as the business requires. Modular solutions like VibeRack make it easy to start small and scale in stages as demand grows.

Alignment with the business model

Every investment in infrastructure should answer the question: How will it generate value for the business? Make sure you align the technology with your revenue model:

- Identify AI use cases: Will your own infrastructure enhance existing products with intelligent features or enable new services? Perhaps you plan to offer predictive analytics to clients, implement a specialized chatbot, or market an API with your trained models.

- Define your value proposition and expected return: Decide whether AI will help you increase revenue (innovative offerings that attract customers) or reduce costs (by automating internal processes). For example, streamlining product development or accelerating data analysis translates into efficiency and a competitive advantage.

Inspiring fact: Companies that adopt AI achieve up to 2.5x more revenue growth and 2.4x more productivity than those that don’t (source: itmastersmag.com). Aligning AI with the core business can trigger a virtuous cycle of innovation and ROI.

Cloud Equipment Location and Environment for AI

Where you host your AI servers is critical. Before deciding, ask yourself whether your current facilities can handle the power and thermal load the platform will require, and whether that headroom will still be sufficient as you grow.

- Electrical Capacity: Does the room have dedicated power circuits with enough amperage for the cluster’s peak power draw? An 8-GPU node can draw around 3 kW with mid-range GPUs and considerably more with high-end training GPUs; a full rack can exceed 25 kW.

- Adequate Cooling: Make sure your HVAC equipment can handle the additional heat without compromising other areas. If you’re considering liquid cooling, confirm that you have the necessary piping, a chiller, and space for the heat-exchanger connections.

- Location scalability: You can start at your headquarters or corporate data center and migrate to an external data center when thermal density or demand increases. Planning this path in advance keeps you from getting stuck later.

- Data custody and regulations: If you handle sensitive information (healthcare, financial, etc.), check data residency and transfer requirements (e.g., GDPR) and how you will protect the intellectual property of your models.
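The electrical and cooling questions above come down to simple arithmetic: how many nodes fit within a rack’s power budget, and how much heat that load produces. The sketch below assumes a per-node draw and a rack power limit that you would take from your own hardware datasheets and facility; the 80% headroom factor and the function name `rack_budget` are hypothetical. It uses the standard conversion of 1 W of electrical load to about 3.412 BTU/h of heat, which is the unit many HVAC specifications use.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W of IT load becomes roughly 3.412 BTU/h of heat


def rack_budget(node_power_kw, rack_limit_kw, headroom=0.8):
    """Estimate how many nodes fit in a rack and the heat they generate.

    node_power_kw: peak draw of one node (from the vendor datasheet).
    rack_limit_kw: power the rack circuit can deliver.
    headroom: fraction of the limit you allow yourself to use
              (hypothetical 80% default, leaving a safety margin).
    Returns (nodes, total_kw, heat_btu_per_hour).
    """
    usable_kw = rack_limit_kw * headroom
    nodes = int(usable_kw // node_power_kw)
    total_kw = nodes * node_power_kw
    heat_btu_h = total_kw * 1000 * WATTS_TO_BTU_PER_HOUR
    return nodes, total_kw, heat_btu_h


nodes, total_kw, heat = rack_budget(node_power_kw=3.0, rack_limit_kw=25.0)
print(f"{nodes} nodes, {total_kw:.1f} kW, {heat:,.0f} BTU/h")  # 6 nodes, 18.0 kW, 61,416 BTU/h
```

Repeating the calculation with the draw of higher-end GPUs quickly shows when a room outgrows air cooling or needs to move to a data center.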

Location impacts costs, security, and performance. Hosting racks on-premises offers complete control but requires setup and maintenance. Colocation simplifies operations in exchange for a monthly fee. Choose the scenario that best balances power, cooling, compliance, and projected growth.

Operating Budget and Hidden Costs

Don’t forget operating costs:

- Power and Cooling: A server with 8 GPUs draws several kilowatts and generates a lot of heat.

- Personnel: Engineering hours to manage it (or external support if you don’t have your own team).

- Maintenance and Renewal: Replacing or upgrading components over time.
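Power and cooling are usually the easiest of these costs to estimate in advance. The sketch below is a rough approximation, assuming a PUE (Power Usage Effectiveness, total facility power divided by IT power) to account for cooling overhead and an average load factor because GPUs rarely run at peak around the clock. The 1.5 PUE, 70% load factor, the 0.15 €/kWh price and the function name `annual_energy_cost` are all hypothetical; replace them with your own measurements and tariff.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(it_load_kw, price_per_kwh, pue=1.5, avg_load_factor=0.7):
    """Estimate yearly electricity cost for an AI node, cooling included.

    it_load_kw: peak draw of the IT equipment.
    pue: facility power / IT power; multiplying by it adds cooling overhead.
    avg_load_factor: average fraction of peak draw over the year.
    pue and avg_load_factor are hypothetical defaults.
    """
    kwh_per_year = it_load_kw * avg_load_factor * HOURS_PER_YEAR * pue
    return kwh_per_year * price_per_kwh


cost = annual_energy_cost(it_load_kw=3.0, price_per_kwh=0.15)
print(f"{cost:,.2f} EUR per year")  # 4,139.10 EUR per year
```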

Planning for these expenses will help you keep your infrastructure profitable over the long term. Periodically compare operating expenses with the benefits achieved (savings compared to the cloud, improvements in development times, quality of results). Ideally, your own infrastructure should pay for itself through efficiency and the new opportunities it creates.
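One way to make that periodic comparison concrete is a simple payback calculation: divide the up-front investment by the net monthly benefit. This is a minimal sketch, assuming the benefit can be expressed as cloud spending avoided plus any extra revenue, minus monthly operating costs; the function name `payback_months` and all figures in the example are hypothetical.

```python
def payback_months(capex, monthly_opex, monthly_cloud_cost_avoided,
                   monthly_extra_revenue=0.0):
    """Months until the investment pays for itself, or None if it never does.

    capex: up-front hardware and installation cost.
    monthly_opex: power, cooling, personnel and maintenance per month.
    monthly_cloud_cost_avoided: what the same workload would cost in the cloud.
    monthly_extra_revenue: new income enabled by the platform (optional).
    """
    net_monthly_benefit = (monthly_cloud_cost_avoided + monthly_extra_revenue
                           - monthly_opex)
    if net_monthly_benefit <= 0:
        return None  # under these assumptions the investment never pays back
    return capex / net_monthly_benefit


# Hypothetical figures for illustration only.
print(payback_months(capex=250_000, monthly_opex=4_000,
                     monthly_cloud_cost_avoided=14_000))  # 25.0
```

Recalculating this figure every quarter with real numbers shows whether the infrastructure is on track or whether usage needs to grow.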

Cloud Security and Compliance for AI

Protect both hardware and data:

- Restricted physical access to racks (secure room with access control).

- Encryption and strong authentication to protect information.

- Isolated networks for AI if you handle particularly sensitive data.

- Regulatory Compliance: GDPR or other regulations for your industry.

- Contingency Plans: Backups and disaster recovery to ensure service continuity.

Cloud Infrastructure Maintenance and Support for AI

Your AI infrastructure will require ongoing maintenance:

- Preventive maintenance and monitoring: Filter cleaning, firmware updates, systems that alert you to overheating or performance drops.

- Vendor support: Ibertrónica can assist you with installation, parts replacement, and technical support for your VibeRack.
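As an example of the monitoring mentioned above, NVIDIA GPUs can be queried with `nvidia-smi --query-gpu=... --format=csv,noheader,nounits`, which prints one comma-separated line per GPU. The sketch below parses that output and flags GPUs above a temperature limit. It assumes all queried fields are numeric (some GPUs report `[N/A]` for certain fields, which this sketch does not handle), and the 83 °C limit and function names are hypothetical; check your GPU model’s specifications for the right threshold and hook the result into whatever alerting system you use.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,temperature.gpu,utilization.gpu",
    "--format=csv,noheader,nounits",
]
TEMP_LIMIT_C = 83  # hypothetical alert threshold


def parse_gpu_status(csv_text):
    """Turn lines like '0, 62, 97' into dicts (assumes numeric fields)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, temp, util = (field.strip() for field in line.split(","))
        gpus.append({"index": int(index), "temp_c": int(temp), "util_pct": int(util)})
    return gpus


def overheating(gpus, limit=TEMP_LIMIT_C):
    """Return the indices of GPUs at or above the temperature limit."""
    return [g["index"] for g in gpus if g["temp_c"] >= limit]


def read_gpus():
    """Run nvidia-smi on the local machine (requires NVIDIA drivers)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_status(out)


sample = "0, 62, 97\n1, 85, 99\n"
print(overheating(parse_gpu_status(sample)))  # [1]
```

On a real server you would call `overheating(read_gpus())` periodically, for example from a scheduled job, and send a notification when the list is not empty.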

Diligent maintenance prolongs the life of your investment and ensures stable service for your AI initiatives.

Practical example: Start with an 8-GPU node

Consider an initial scenario: set up your first AI machine with a node that includes 8 high-performance GPUs.

- Significant computing power: With 8 GPUs in parallel, you can tackle demanding projects without resorting to the cloud.

- Initial simplicity: All GPUs communicate internally (via PCIe or NVLink) without complicated interconnection networks.

- Controlled and scalable investment: Allows you to test the return on AI on a small scale before committing more capital. Once you’ve proven your value, you can add more nodes as demand grows.

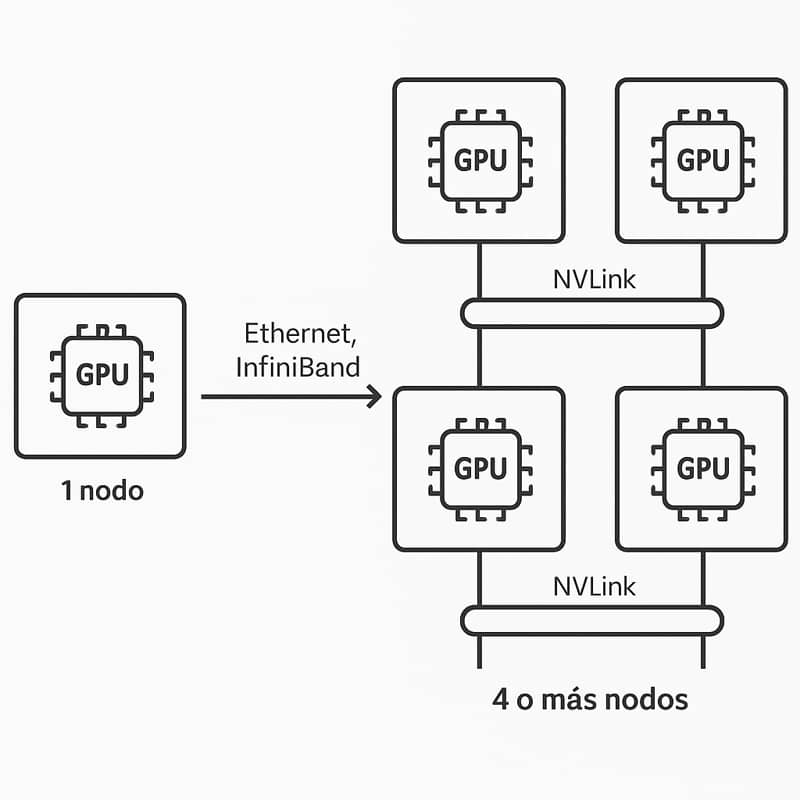

Scaling to Multiple Nodes: Networking and NVLink

If your first node falls short and you scale from 1 to 2 to 4 nodes, keep in mind:

- Inter-node Communication: You’ll need a high-speed network (e.g., 100 Gbps Ethernet or InfiniBand) to avoid bottlenecks.

- Interconnect Technologies (NVLink): This is primarily useful when multiple GPUs are within the same server, allowing them to share data quickly. If you’re adding more GPUs to the same node, consider NVLink bridges. In multi-server clusters, focus on good networking first.
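To see why network speed matters, consider data-parallel training, where nodes exchange gradients after every step, commonly with a ring all-reduce. In a ring all-reduce, each participant sends and receives about 2(N−1)/N times the gradient size, so a bandwidth-only estimate of the exchange time is that traffic divided by the link speed. The sketch below treats each node as one ring participant with a single network link and ignores latency, overlap with computation, and traffic inside the node; the 7-billion-parameter model, 2-byte gradients and the function name `allreduce_seconds` are hypothetical.

```python
def allreduce_seconds(model_params, bytes_per_param, nodes, link_gbps):
    """Bandwidth-only estimate of one ring all-reduce across `nodes`.

    Each participant transfers 2 * (N - 1) / N times the gradient size.
    Ignores latency, compute overlap and intra-node (NVLink/PCIe) traffic.
    """
    if nodes < 2:
        return 0.0  # a single node needs no inter-node exchange
    payload_bytes = model_params * bytes_per_param
    traffic_bytes = 2 * (nodes - 1) / nodes * payload_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8  # Gbps -> bytes per second
    return traffic_bytes / link_bytes_per_s


# Hypothetical 7B-parameter model with 2-byte (FP16/BF16) gradients on 4 nodes.
for gbps in (100, 400):
    t = allreduce_seconds(7e9, 2, nodes=4, link_gbps=gbps)
    print(f"{gbps} Gbps: {t:.2f} s per gradient exchange")
# 100 Gbps: 1.68 s per gradient exchange
# 400 Gbps: 0.42 s per gradient exchange
```

If the exchange time approaches the time each step spends computing, the network becomes the bottleneck and adding nodes yields diminishing returns.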

Scaling out multiplies your AI capabilities but adds complexity. Include in your growth plan when and how you will scale, and make sure you have the budget (new racks, GPUs, networking) and the necessary support ready.

Strategic benefits: the virtuous cycle of AI

Investing in your own AI infrastructure can:

- Improve products: Intelligent features attract and retain customers.

- Optimize operations: Cost savings and increased productivity.

More than half of the companies that have adopted AI report significant efficiency improvements (source: itmastersmag.com).

These benefits feed back into each other: the extra revenue and savings can be reinvested in new initiatives, creating a virtuous cycle of continuous growth.

Conclusion

After reviewing this checklist, it’s time to move from plan to action.

Remember, you don’t have to do it alone. Ibertrónica can advise you at every stage, with experience and solutions (such as VibeRack racks) to create the ideal AI infrastructure for your business.

Contact us for personalized advice: we will help you design the solution tailored to your company and budget.
